Ansys Fluent GPU Performance Testing – Use Case

Dr.-Ing. Matthias Voß

06.12.2024

Tech Article 24/17 | Glorious Processing Unit or briefly: GPU

Whether in mechanical engineering, aviation or product development, flow simulations are essential for precise and fast development processes. But how do CPU- and GPU-based simulations differ and what are the most important criteria when evaluating hardware? This article highlights fundamental differences, performance metrics and the possible applications of GPU and CPU setups for your CFD projects.

GPU Power | © Adobe Firefly

CPU/GPU – Design and Differences

CPU (Central Processing Unit) and GPU (Graphics Processing Unit) are both processing units, but with different architectures and functions. Hardware development over the past 30 years shows that growth in computing power now comes from more transistors and more cores per chip rather than from higher clock rates or single-core performance (see figure). CPUs have few but powerful cores that process complex tasks sequentially, while GPUs consist of many so-called Streaming Multiprocessors (SMs). These SMs allow the GPU to manage a large number of threads simultaneously, which makes it well suited to simulation workloads.

Nvidia and AMD offer various GPU architectures. Nvidia's SMs consist of numerous CUDA cores that deliver high throughput during simulation execution. AMD offers a flexible architecture with its Compute Units based on ROCm (Radeon Open Compute) and allows for dynamic memory expansion. Starting from the Ansys Release 2024R2, AMD cards can also be used. Currently, Nvidia GPUs are the preferred choice for flow simulations due to better hardware optimization for professional simulation software. The following explanations refer to Nvidia GPUs but are generally applicable to GPUs from other manufacturers.

Trends in chip properties and components up to 2021 | © CADFEM Germany GmbH, source: GitHub

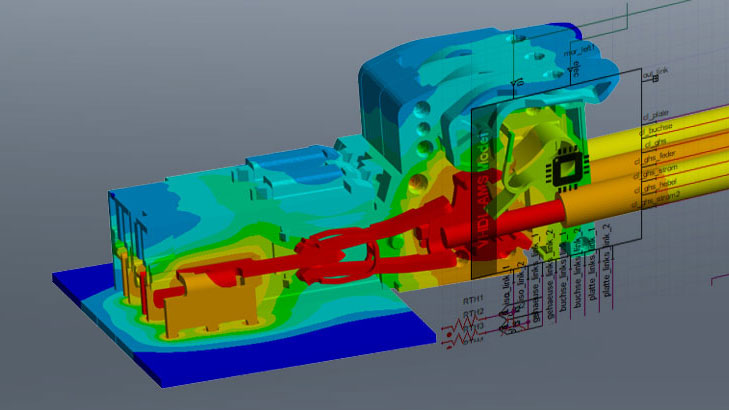

Let's take a fluid dynamics example, a valve with steady, turbulent water flow, and calculate it on both CPU and GPU with different grid sizes to compare the systems. The grid size ranges from small (0.65 million elements) to significantly larger (1.0, 3.2, and 10 million elements). Polyhedral elements with boundary layer resolution were used, following good meshing practice for flow simulation with Ansys Fluent (see figure). But how do we evaluate the calculations afterwards? Larger grids involve more equations and take longer to compute. We need an evaluation criterion: a metric, a norm.

Typical internal flow in the example of a valve and the total computation times on various hardware. | © CADFEM Germany GmbH

CPU and GPU compared with MIUPS

In our case, the MIUPS metric serves to quantitatively evaluate the efficiency and performance of a system. For fluid simulation, computational power is measured in Million Iteration-Updates per Second (MIUPS). This metric indicates how many iteration updates of grid elements, counted in millions, the system performs per second (1 s), providing a basis for comparison between different hardware setups. The higher the MIUPS value, the more efficiently the system operates. Because the value is normalized by grid size and iteration count, it provides relative performance data that enables comparisons between CPUs and GPUs, as well as between various benchmark cases on identical hardware.
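The definition above can be written as a one-line formula. The following is a minimal sketch that assumes MIUPS is computed as cells times iterations divided by wall time and by one million; the function name `miups` and its parameters are illustrative and not part of any Ansys tool. The input values are the ones quoted in this article for the CPU run of the valve case.

```python
def miups(cells: int, iterations: int, wall_time_s: float) -> float:
    """Million iteration-updates per second (assumed definition):
    cell updates per second, divided by one million."""
    if wall_time_s <= 0:
        raise ValueError("wall_time_s must be positive")
    return cells * iterations / wall_time_s / 1e6


# Values quoted in this article for the CPU run of the valve case:
# 10 million cells, 1000 iterations, roughly 120 minutes of wall time.
cpu_value = miups(cells=10_000_000, iterations=1000, wall_time_s=120 * 60)
print(f"CPU: {cpu_value:.2f} MIUPS")  # prints "CPU: 1.39 MIUPS"
```

The result lies in the same range as the value of approximately 1.5 MIUPS reported for the CPU hardware in this article.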

As the figure shows, computation times for our valve case increase as expected with grid size (0.65-10 million elements) on both CPU (5-120 minutes) and GPU (1-10 minutes). The MIUPS value is now the crucial factor. If computation times increase linearly with grid size, the result is a constant MIUPS value for the specific case and hardware. We therefore examine the slope of the computation times over grid size and compare this value across hardware systems and calculation cases. For the case considered here, the MIUPS value is approximately 1.5 for the CPU hardware and approximately 35 for an A800 card.
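The slope-based evaluation can be sketched as a least-squares fit of wall time over cell count. This is one possible way to do it, not necessarily the procedure used for the figures in this article; the function name `miups_from_slope` is hypothetical, and the timings below are synthetic values generated for illustration, not measurements.

```python
def miups_from_slope(cells, times_s, iterations):
    """Fit wall time over cell count by least squares and convert
    the slope (seconds per additional cell) into MIUPS."""
    n = len(cells)
    if n < 2 or n != len(times_s):
        raise ValueError("need at least two matching (cells, time) pairs")
    mean_x = sum(cells) / n
    mean_y = sum(times_s) / n
    sxx = sum((x - mean_x) ** 2 for x in cells)
    if sxx == 0:
        raise ValueError("grid sizes must differ")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(cells, times_s))
    slope = sxy / sxx  # seconds per additional cell
    if slope <= 0:
        raise ValueError("wall time does not grow with grid size")
    return iterations / slope / 1e6


# Synthetic, perfectly linear timings (NOT measurements from the article):
# 30 s fixed overhead plus 1000 iterations at 20 MIUPS.
grid_sizes = [650_000, 1_000_000, 3_200_000, 10_000_000]
timings = [30.0 + c * 1000 / 20e6 for c in grid_sizes]
print(round(miups_from_slope(grid_sizes, timings, iterations=1000), 3))
```

Because the fit uses only the slope, a fixed overhead such as start-up or file I/O does not affect the result. For the synthetic data above, the fit recovers the 20 MIUPS that were used to generate it.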

The MIUPS values of CPUs are usually lower than those of GPUs. A modern cluster CPU typically achieves 2-20 MIUPS, while GPUs can reach 50-100 MIUPS and higher. This higher computational power allows for faster simulation execution and shorter development cycles, which is a significant advantage in industrial applications. GPUs also reach their full acceleration potential only above a certain model size (>1 million elements in the chosen example); below that, the problem is simply “too small”. Of course, a problem can also be “too large” and not fit into the video memory (Video RAM, VRAM) of the graphics card. What determines this limit?

Computation times for 1000 iterations for various grid sizes of the valve example and the MIUPS value for the A800 graphics card | © CADFEM Germany GmbH

VRAM vs. RAM and their Importance in CFD

The so-called VRAM (Video RAM) is the memory that the GPU uses to store data during processing, while RAM (Random Access Memory) serves as the working memory of the CPU. VRAM is optimized for parallel processing and has a high bandwidth tailored to the demands of graphics and simulation tasks. In contrast, CPU RAM is more versatile but less suited for parallel processing. CPU RAM can also be upgraded easily, whereas VRAM is firmly integrated into the architecture of the respective card and cannot be changed. Therefore, only so much “fluid dynamics” fits on a GPU.

Memory demand in GPU-accelerated calculations primarily depends on the size of the simulation grid, the chosen physical models (energy, radiation, turbulence, mesh movement, species, VoF, etc.), and the selected precision (Single/Double). Larger and more complex models place greater demands on VRAM. The VRAM capacity of the graphics card thus limits the size and level of detail of the simulation. The same limit exists in CPU calculations, but it is reached less often because CPU systems are easier to upgrade.

General trend of VRAM demand growth with additional models, settings, and solution settings in Ansys Fluent | © CADFEM Germany GmbH

Specific values for the valve case | © CADFEM Germany GmbH

As a rule of thumb, approximately 1-3 GB of VRAM is required for every million grid elements (see figure). This value increases with varying element types (tet, hex, poly) and, of course, other calculation settings (Energy On/Off, Turbulence, Solid-CHT, Radiation, coupled solver). So, to what extent is a conventional graphics card sufficient, and when are dedicated computing cards needed? Also, how much does such a hardware setup cost?
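The rule of thumb lends itself to a quick feasibility check before a run. The sketch below assumes only the 1-3 GB per million elements quoted above and the 16 GB and 40 GB card capacities mentioned in this article; the function names are illustrative, and real memory demand depends on the models and settings listed above.

```python
def vram_range_gb(cells, gb_per_million=(1.0, 3.0)):
    """Lower and upper VRAM estimate from the 1-3 GB per million cells rule."""
    low, high = gb_per_million
    millions = cells / 1e6
    return millions * low, millions * high


def fits_on_card(cells, card_vram_gb):
    """Classify a case against a card's VRAM using the rule-of-thumb range."""
    low, high = vram_range_gb(cells)
    if high <= card_vram_gb:
        return "fits"
    if low > card_vram_gb:
        return "too large"
    return "depends on models and precision"


# The 10 million element valve case against the two capacities in this article.
for name, vram in (("16 GB card", 16), ("40 GB card", 40)):
    print(f"{name}: {fits_on_card(10_000_000, vram)}")
```

For 10 million elements, the estimate spans 10-30 GB, so the outcome on a 16 GB card depends on the selected models and precision, while a 40 GB card leaves headroom.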

Types of GPU – a guideline

For this comparison, we take one card from the Rendering segment (Nvidia RTX A4000) and one from the Computing segment (Nvidia A800). The RTX A4000, a typical desktop graphics card, offers solid Single-Precision performance and is well-suited for smaller to medium-sized computational models. On the other hand, the A800, a computing card, is designed for more complex simulations with high precision and offers higher computing power and higher VRAM capacity. The A800 is suitable for both Single and Double Precision calculations. In Double Precision, it is significantly more powerful than a rendering card, making it a better choice for simulations where Double Precision accuracy is required.

The RTX A4000 is cheaper to acquire than the A800. For applications that don't require Double Precision, it offers an attractive price-performance ratio. The RTX A4000 represents the class of desktop graphics cards that can often be found in existing systems within a company. It's worth taking a closer look at these cards because they can already outperform CPU systems with 20-60 cores (see the MIUPS values, or 30 cores = 2 h vs. one RTX A4000 = 24 min in the first section). They often represent an untapped computing resource within companies. In general, existing HPC licenses and an upgrade to the Enterprise license required for GPU operation are sufficient to run such a card (see also the last section).

The A800, however, justifies its higher price with better performance and larger VRAM, making it a worthwhile investment for demanding simulations. In many cases, the larger VRAM is the primary selling point, since CFD simulations only run efficiently on a GPU above several million elements, which requires a certain amount of memory. Another important factor is that computing cards come with a cooling system designed for continuous operation, which is not always the case with rendering cards. The cooler the card stays, the faster it is. But what if even a computing card can't handle the problem? Answer: many hands make light work, or in other words, Multi-GPU.

| | Rendering Card | Computing Card |
|---|---|---|
| Performance Double Precision | - (0.3 TFLOPS, 48 SMs) | ++ (10 TFLOPS, 108 SMs) |
| Memory | + (16 GB) | +++ (40 GB) |
| Multi-GPU | + | +++ |
| Price | +++ (~1,000 €) | + (~17,000 €) |
| Valve case runtime (10 million elements) | ++ 24 min | ++++ 7 min |

Comparison of the cards based on performance, VRAM, and purchase price, as well as a comparison of runtimes for the valve test case with 10 million cells. | © CADFEM Germany GmbH

Multi-GPU, NVLink: Everything needs to be fast.

Once the level of detail of a CFD simulation (grid size), the models used and the required precision are fixed, the VRAM of a single card may not be sufficient. The computation must then run with these settings on multiple GPUs with correspondingly more total memory. For the cards to share the work without losing performance, they must exchange data quickly. This communication can take place over two paths: NVLink or PCI Express (PCIe).

NVLink is a high-speed connection developed by Nvidia to accelerate data exchange between multiple GPUs. It transfers data at significantly higher bandwidths than the PCI Express (PCIe) interface and allows GPUs to share memory resources efficiently, which benefits large simulations that process high data volumes. PCIe, on the other hand, is the standard connection between the GPU and the motherboard, but it has a lower bandwidth. PCIe connections can become a bottleneck for more complex applications, which is why NVLink is often preferred for demanding simulations such as flow analyses with high data requirements.

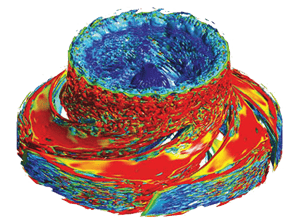

As the figure shows for a large benchmark case (DrivAer, 250 million elements), multi-GPU calculations can significantly outperform large CPU systems: 2x H200 reach 630 MIUPS, compared with 64 MIUPS for 512 CPU cores. The combination of a fast connection (NVLink) with a modern GPU architecture (Nvidia Hopper, H100 or H200) has already increased performance by a factor of 10-100 as of 2024, and this trend is set to continue. The figure also shows that the parallel efficiency across the connected cards is almost 100% with Ansys Release 2024R2. Adding cards to an existing GPU system can therefore translate directly into a performance gain.

Performance data comparison: 512 to 12,288 cores and multi-GPU systems with L40, A100, H100, H200 | © Ansys, Inc.
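The two quantities discussed for the DrivAer benchmark, speedup and parallel efficiency, can be expressed as simple ratios of MIUPS values. The sketch below assumes the usual definitions (speedup as the ratio of two throughputs, parallel efficiency as multi-GPU throughput divided by the number of GPUs times single-GPU throughput). The function names are illustrative, and the single-GPU value needed for the efficiency is an input that this article does not state.

```python
def speedup(miups_new: float, miups_ref: float) -> float:
    """Ratio of two throughput values."""
    return miups_new / miups_ref


def parallel_efficiency(miups_multi: float, miups_single: float, n_gpus: int) -> float:
    """Share of ideal linear scaling reached by n_gpus cards (1.0 = 100%)."""
    if n_gpus < 1:
        raise ValueError("n_gpus must be at least 1")
    return miups_multi / (n_gpus * miups_single)


# Values quoted in this article: 2x H200 at 630 MIUPS vs. 512 cores at 64 MIUPS.
print(f"{speedup(630, 64):.1f}x")  # prints "9.8x"
```

To evaluate `parallel_efficiency`, the MIUPS value of a single card on the same case would have to be measured first.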

You want to start right away? No problem.

Do you already have a good desktop computer with a modern GPU? Here is how to find the relevant performance data (VRAM and number of SMs).

The Fluent Launcher is the first place to check the GPU resources of an existing computer. After selecting the Enterprise level, activate the Native GPU Solver under the Solutions category in the options on the right side. All installed GPUs are then listed with their relevant specifications (type, name, VRAM, SMs). It is recommended to use one CPU per GPU for calculation preparation.

Fluent Launcher with the Native GPU Solver activated, GPU: RTX A4500, VRAM: 17.18 GB, SM: 46 | © CADFEM Germany GmbH

Unlike CPU cores, of which any subset can be selected (for example, 30 of 40 cores of a CPU), the computing cores of a GPU cannot be used in part. All available SMs of the card are always used; in our launcher example, that is 46 SMs. These SMs must be covered by HPC licenses, analogous to the selected cores of a CPU calculation. The basic license (CFD Enterprise) already includes 40 SM increments for GPU use. With conventional desktop GPUs, you can therefore get started directly with CFD Enterprise and one HPC Pack. Typical CFD users have at least one HPC Pack and can start with the GPU immediately. Give it a try!
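The license arithmetic for the launcher example can be sketched as follows. This is a simplified illustration that assumes only what the text states, namely that the base license covers 40 SMs and that the remaining SMs must be covered by HPC licenses. How many SMs an HPC Pack covers is not modelled here, and the names `BASE_LICENSE_SMS` and `sms_needing_hpc` are hypothetical.

```python
BASE_LICENSE_SMS = 40  # SM increments included in CFD Enterprise, per the text


def sms_needing_hpc(card_sms: int, base: int = BASE_LICENSE_SMS) -> int:
    """SMs that still have to be covered by HPC licenses (simplified)."""
    if card_sms < 0:
        raise ValueError("card_sms must not be negative")
    return max(0, card_sms - base)


# Launcher example from this article: a card with 46 SMs.
print(sms_needing_hpc(46))  # prints 6
```

A card with 40 SMs or fewer would, under this simplified reading, need no additional HPC licenses.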

Given the current hardware development towards increasingly powerful single-GPU cards (such as Nvidia Grace Hopper or Nvidia Blackwell) and consequently also GPU clusters, it can be expected that in the medium term, computational power of around 100 MIUPS will be available on the desktop. That is about 10x the computational power of current systems! Large GPU systems, in the range of 1,000 to 10,000 MIUPS, will become even more attractive in terms of acquisition, operation and availability compared to corresponding CPU solutions.

Have we piqued your interest? Feel free to approach us with your questions and ideas or take a look directly at our software and hardware offerings for a suitable solution.

Technical Editorial

Dr.-Ing. Marold Moosrainer

Head of Professional Development

+49 (0)8092 7005-45

mmoosrainer@cadfem.de